Credit losses are forecasted to increase 7.5% in 2026. Your probability of default modeling must catch deteriorating credits before they default, cover the 90% of counterparties that rating agencies don’t touch, and satisfy IFRS 9/CECL auditors with a transparent, validated methodology.

Internal models excel at portfolio-specific loss patterns but struggle with cross-institution benchmarking. Traditional rating agencies focus on public issuers and large corporations, leaving mid-market and private credit exposures without external validation. Manual assessments fail regulatory validation and don’t scale.

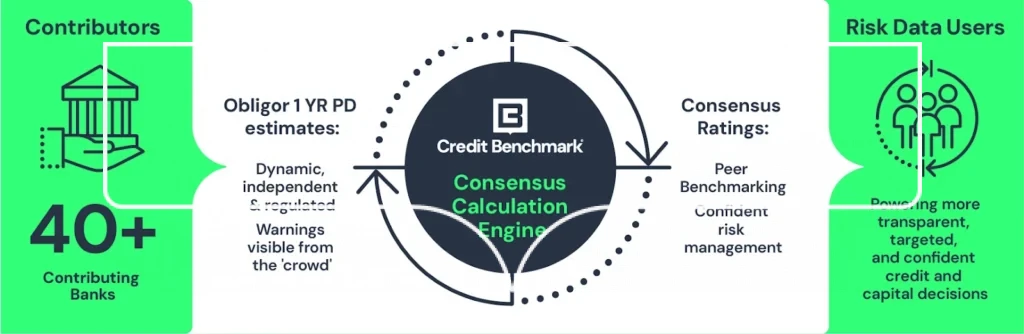

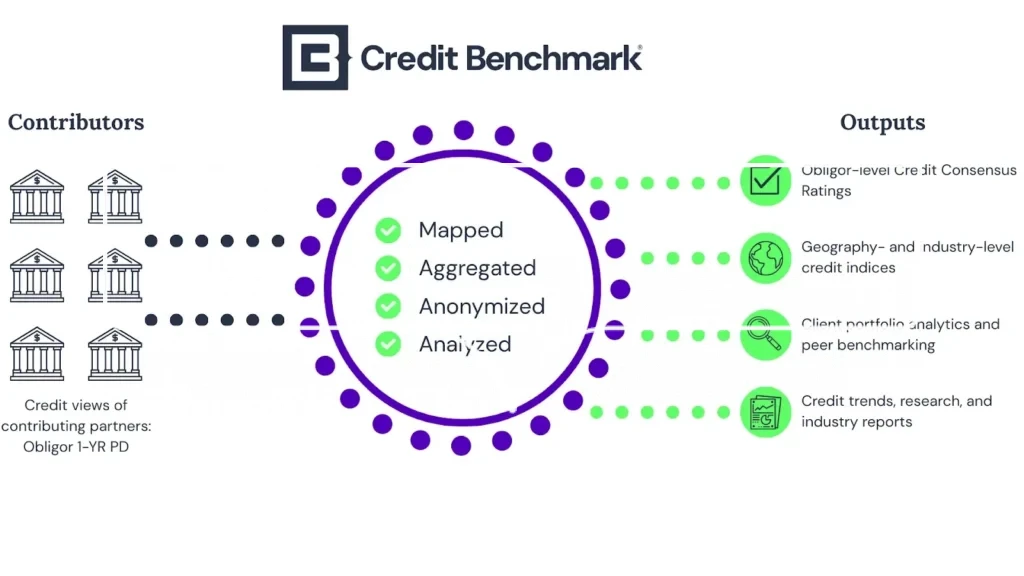

The complement: aggregated credit consensus views from 40+ global banks that validate and strengthen internal models. Real risk assessments from institutions with actual exposure, updated weekly, covering 120,000+ entities. Implementation in 90 days, not two years.

Here’s why and how leading institutions are embedding consensus data into PD frameworks.

Why Financial Institutions Need Consensus Data in Their Probability of Default Model

Consensus-based PD modeling leverages the collective default experience and real-time credit assessments of major global banks to fill gaps that internal models and agency ratings can’t address.

This isn’t about replacing analysts or abandoning internal models. It’s about providing validated, peer-driven intelligence so credit teams focus on judgment calls that matter, not gathering data that already exists elsewhere.

The table below illustrates how consensus data strengthens your stack of credit risk solutions:

| Validation Approach | What It Validates | Best For | Update Frequency | Regulatory Acceptance |
|---|---|---|---|---|
| Internal Backtesting | Model performance on your portfolio | Portfolio-specific calibration | Annual | Required but insufficient alone |
| Agency Ratings | Standardized external view | Public issuer benchmarking | Quarterly | Established but limited coverage |
| Consensus Data | Peer institution alignment | Unrated and rated entity validation | Weekly | Emerging standard for peer benchmarking |

Coverage where you actually need it

Traditional rating agencies focus their coverage on public issuers and large corporations. Mid-market borrowers, private equity portfolio companies, and fund structures, which together comprise 70-80% of institutional portfolios, lack external ratings.

Traditional credit data providers offer essential public company coverage, but can’t economically rate hundreds of private entities in your portfolio.

Consensus data extends validated credit views to 120,000+ entities. More strategically, over 90% of covered entities lack equivalent S&P/Moody’s/Fitch ratings—precisely where portfolio blind spots exist.

The private company emphasis proves valuable for unrated subsidiaries, middle-market borrowers, PE portfolio companies, and fund finance counterparties that analysts struggle to assess using traditional sources.

Weekly updates that catch deterioration early

Weekly updates based on one million monthly risk observations capture sectoral stress weeks before quarterly agency reviews. Contributing banks adjust internal views continuously for portfolio management—these assessments can surface deterioration 6-8 months ahead of formal downgrades.

By the time agencies downgrade a borrower, markets have repriced the risk. Annual manual reviews lag even further. Weekly consensus updates provide the same early warning signals major banks use to manage their own portfolios.

Aligned incentives strengthen validation

Rating agencies provide valuable standardized assessments. Consensus data adds a different perspective: credit views from banks managing real balance sheet risk. Contributing institutions aren’t paid for ratings—they’re managing actual exposure.

When consensus views align with agency ratings, it validates your assessment. When they diverge, it prompts investigation: Have contributing banks identified risks agencies haven’t captured yet, or do agency analysts see structural factors banks are missing? Both scenarios strengthen credit decisions.

Regulatory transparency that passes audits

BCBS 239 and ECB TRIM guidelines increasingly require benchmarking internal PD estimates against external references—a requirement that intensifies under Basel IV’s 72.5% output floor and IFRS 9’s forward-looking provisions.

Consensus data from 40+ regulated institutions, each operating under approved Basel frameworks, provides the independent validation that supervisors expect.

You’re demonstrating alignment with collective credit views of peer institutions under identical regulatory oversight, not defending a single vendor’s proprietary methodology.

The Practical Roadmap to a Functioning PD Model Using Consensus Data

Building a functioning PD model using consensus data requires five phases: gap assessment, requirements definition, integration, calibration and validation, and operationalization. Most institutions complete implementation within 60-90 days.

Phase 1: Map consensus data to existing validation gaps

Before integration, quantify specific validation gaps:

- Coverage gaps: Which portfolio segments lack external benchmarks? The prevalence of private credit and other non-publicly traded assets often means a significant portion of an institutional portfolio is unrated by traditional external credit rating agencies. These gaps are particularly pronounced in private equity holdings, mid-market corporates, and fund structures.

- Calibration questions: Where did supervisors challenge your internal PD estimates in your last ICAAP or CCAR review? Document the specific obligor categories flagged for lacking peer validation.

- Timing mismatches: Which sectors deteriorated faster than quarterly model updates captured? Pull 2020-2023 defaults—identify where internal models or agency ratings failed to predict deterioration early enough for corrective action.

These gaps define your validation priorities.
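The coverage-gap question can be quantified with a few lines of code. The sketch below is illustrative only: the portfolio rows, segment names, and exposure figures are hypothetical, and a real extract would come from your own exposure data.

```python
from collections import defaultdict

# Hypothetical portfolio extract:
# (segment, exposure in USD millions, has an external agency rating)
portfolio = [
    ("Large corporate", 400.0, True),
    ("Mid-market corporate", 250.0, False),
    ("Mid-market corporate", 50.0, True),
    ("PE portfolio company", 200.0, False),
    ("Fund finance", 100.0, False),
]

def unrated_share_by_segment(rows):
    """Return {segment: share of exposure with no external rating}."""
    total = defaultdict(float)
    unrated = defaultdict(float)
    for segment, exposure, rated in rows:
        total[segment] += exposure
        if not rated:
            unrated[segment] += exposure
    return {segment: unrated[segment] / total[segment] for segment in total}

coverage_gaps = unrated_share_by_segment(portfolio)

# Exposure-weighted share of the whole book without an external benchmark
overall_unrated = (
    sum(exposure for _, exposure, rated in portfolio if not rated)
    / sum(exposure for _, exposure, _ in portfolio)
)

for segment, share in sorted(coverage_gaps.items(), key=lambda kv: -kv[1]):
    print(f"{segment}: {share:.0%} unrated")
print(f"Overall: {overall_unrated:.0%} unrated")
```

Ranking segments by unrated share gives a simple starting order for the validation priorities.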

Phase 2: Define your requirements

With gaps quantified, specify what your PD framework must deliver.

Coverage requirements: Be precise. “We need PD estimates for 800 mid-market European manufacturing companies” is actionable. “We need better data” is not. Document exact entity count, geographies, and industries where reliable PD estimates don’t exist.

Update frequency: Lending to volatile sectors or operating in fast-moving markets requires weekly updates to catch deterioration before it becomes default. Credit Benchmark refreshes weekly based on approximately one million risk observations monthly from contributing banks.

Transparency requirements: What methodology documentation do auditors require? Some institutions need detailed white papers explaining model mechanics. Others need proof that the data source is credible and validated by regulators. Know requirements before vendor discussions.

Budget and timeline: If your next IFRS 9 audit occurs in six months, you need implementation and validation to be complete in four. Be realistic about constraints.

Phase 3: Embed consensus data as a validation layer

Consensus data doesn’t replace existing infrastructure—it validates and extends it:

- For loan origination: Analysts continue using internal models but cross-check consensus PD estimates before final approval. Divergences exceeding 100bps trigger investigation.

- For model validation: Consensus term structures provide the external benchmark that supervisors require for confirming internal calibration aligns with market views.

- For portfolio monitoring: Weekly consensus updates supplement quarterly agency reviews, catching migration between formal rating cycles.

Integration paths depend on workflow complexity:

- Regional banks with 50 analysts might start with the web application and Excel add-in. Analysts pull consensus data directly into review spreadsheets, calculate expected losses using existing models, and access trend analysis for individual companies.

- Global institutions processing thousands of monthly credit decisions implement the Enterprise API. This provides programmatic access to full datasets, enabling automated PD updates in loan origination systems, risk platforms, and decision engines.

- For batch processing supporting compliance and audit purposes, flat file downloads load complete datasets into risk data warehouses on your schedule.

Phase 4: Calibrate and validate

Coverage addresses the ‘what to rate’ problem. Transition matrices address the ‘how it migrates’ problem of the IFRS 9 and CECL mandate.

Map consensus ratings to your internal scale: Credit Benchmark provides credit ratings on a standardized scale. Map these to internal rating buckets and corresponding PD estimates. A consensus rating of “bb” might map to your internal “Medium Risk” category with 3% PD.
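A minimal sketch of such a mapping table follows. Only the “bb” to “Medium Risk” at 3% PD entry comes from the example above; the other buckets and PD values are placeholders that each institution would replace with its own calibrated scale.

```python
# Consensus rating -> (internal bucket, bucket PD).
# Only the "bb" row reflects the example in the text; the rest are placeholders.
CONSENSUS_TO_INTERNAL = {
    "bbb": ("Low Risk", 0.005),
    "bb": ("Medium Risk", 0.03),
    "b": ("High Risk", 0.08),
}

def map_consensus_rating(rating):
    """Return (internal bucket, PD) for a consensus rating, or raise if unmapped."""
    key = rating.strip().lower()
    if key not in CONSENSUS_TO_INTERNAL:
        # Fail loudly so unmapped ratings are reviewed rather than silently defaulted
        raise KeyError(f"No internal bucket defined for consensus rating {rating!r}")
    return CONSENSUS_TO_INTERNAL[key]

bucket, pd_estimate = map_consensus_rating("bb")
print(bucket, pd_estimate)
```

Raising on an unmapped rating, rather than assigning a default bucket, keeps the mapping logic auditable.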

Apply transition matrices for compliance: IFRS 9 and CECL require modeling how credits migrate over time to calculate lifetime expected losses.

Credit Benchmark’s transition matrices aggregate historical patterns from 40+ banks, showing the probability that a BB-rated entity migrates to B or BBB, or defaults, over horizons of 1, 2, 3, or more years.

For each borrower:

- Take the current consensus rating

- Use a transition matrix to project probability distributions of future ratings over the loan lifetime

- Calculate expected loss at each future point

- Discount to present value for lifetime ECL

This satisfies regulatory requirements for forward-looking loss estimation while leveraging the collective default experience of major global banks.
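The four steps above can be sketched as follows. This is a simplified illustration, not a production ECL engine: the transition matrix values are invented, the matrix is assumed to be the same every year (a time-homogeneous Markov chain), and exposure at default (EAD) and loss given default (LGD) are held constant over the loan life.

```python
STATES = ["BBB", "BB", "B", "D"]  # "D" is default, treated as absorbing

# Hypothetical one-year transition matrix; each row sums to 1
TRANSITION = [
    [0.90, 0.07, 0.02, 0.01],  # from BBB
    [0.05, 0.85, 0.07, 0.03],  # from BB
    [0.01, 0.09, 0.80, 0.10],  # from B
    [0.00, 0.00, 0.00, 1.00],  # from D
]

def step(dist, matrix):
    """Advance a rating distribution by one year (row vector times matrix)."""
    n = len(matrix)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]

def lifetime_ecl(rating, years, ead, lgd, discount_rate):
    """Discounted sum of yearly expected losses over the loan lifetime."""
    dist = [1.0 if state == rating else 0.0 for state in STATES]
    default_idx = STATES.index("D")
    ecl = 0.0
    previous_cumulative_pd = 0.0
    for year in range(1, years + 1):
        dist = step(dist, TRANSITION)
        cumulative_pd = dist[default_idx]
        # Probability of defaulting during this specific year
        marginal_pd = cumulative_pd - previous_cumulative_pd
        ecl += marginal_pd * lgd * ead / (1 + discount_rate) ** year
        previous_cumulative_pd = cumulative_pd
    return ecl

ecl_5y = lifetime_ecl("BB", years=5, ead=1_000_000, lgd=0.45, discount_rate=0.05)
print(f"5-year lifetime ECL: {ecl_5y:,.0f}")
```

In practice the discount rate would be the effective interest rate of the instrument, and EAD would follow the amortization schedule rather than stay flat.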

Backtest against historical defaults: Apply consensus data alongside existing methodology to the past three years. The goal isn’t proving consensus data is always right—it’s understanding where it identifies risks your process missed and where your proprietary insights captured factors consensus views didn’t yet reflect. Both strengthen your overall framework.
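One way to summarize a backtest is the share of historical defaults that received a warning some minimum number of months in advance. The sketch below assumes a hypothetical definition of “warning” (for example, the first date a consensus rating fell below a chosen level); the records are invented for illustration.

```python
from datetime import date

def months_between(earlier, later):
    """Whole calendar months from earlier to later (day of month is ignored)."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)

# Hypothetical backtest records: (obligor, first warning date or None, default date)
defaults = [
    ("Obligor A", date(2022, 1, 15), date(2022, 10, 1)),  # 9 months of lead time
    ("Obligor B", date(2023, 2, 1), date(2023, 5, 20)),   # 3 months of lead time
    ("Obligor C", None, date(2022, 7, 3)),                # never flagged
    ("Obligor D", date(2022, 3, 10), date(2023, 1, 5)),   # 10 months of lead time
]

def early_warning_rate(records, min_lead_months=6):
    """Share of defaults flagged at least min_lead_months before default."""
    caught = sum(
        1
        for _, warned, defaulted in records
        if warned is not None
        and months_between(warned, defaulted) >= min_lead_months
    )
    return caught / len(records)

rate = early_warning_rate(defaults)
print(f"{rate:.0%} of defaults flagged 6+ months early")
```

Running the same function on warnings generated by the existing methodology gives a like-for-like comparison of the two approaches.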

Run parallel testing: For 60-90 days, generate PD estimates and ECL calculations using both existing methodology and consensus data. Look for significant divergences and understand why. Sometimes internal models have valuable proprietary insights. More often, consensus data catches risks that manual processes missed.

Prepare audit documentation: Auditors need evidence consensus data is reliable and appropriate for your use case. Prepare documentation showing:

- Methodology and contributing institutions

- How data is validated by regulators overseeing contributing banks

- Backtesting results demonstrating predictive accuracy

- Mapping logic from consensus ratings to internal scales

- Parallel testing outcomes showing improvements over existing methods

Phase 5: Operationalize across your credit process

Once validated, consensus data should flow into every credit decision point.

Loan origination: When underwriting new credits, analysts check consensus PD estimates as a standard review step. If the consensus view shows an elevated risk that the initial assessment missed, investigate further or price accordingly.

Annual reviews: For existing borrowers, consensus data provides an external benchmark on credit quality. If a borrower’s consensus credit rating deteriorates between annual reviews, trigger early review or adjust monitoring intensity.

Portfolio monitoring: Risk managers track consensus rating migrations across portfolios. Clusters of downgrades in particular industries or regions signal an early warning to tighten underwriting standards or reduce concentration before losses materialize.
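A simple version of this cluster check counts downgrades per sector between two observation dates. The rating scale ordering, the sample migrations, and the cluster threshold of two downgrades are all assumptions made for illustration.

```python
from collections import Counter

# Hypothetical ordinal scale: a higher number means weaker credit quality
RATING_ORDER = {"aaa": 1, "aa": 2, "a": 3, "bbb": 4, "bb": 5, "b": 6, "ccc": 7}

# Hypothetical migrations: (obligor, sector, previous rating, current rating)
migrations = [
    ("Obligor A", "Retail", "bb", "b"),
    ("Obligor B", "Retail", "bbb", "bb"),
    ("Obligor C", "Retail", "bb", "bb"),
    ("Obligor D", "Utilities", "bbb", "a"),
    ("Obligor E", "Energy", "b", "ccc"),
]

def downgrade_clusters(rows, min_count=2):
    """Return {sector: downgrade count} for sectors with at least min_count downgrades."""
    counts = Counter(
        sector
        for _, sector, previous, current in rows
        if RATING_ORDER[current] > RATING_ORDER[previous]
    )
    return {sector: n for sector, n in counts.items() if n >= min_count}

clusters = downgrade_clusters(migrations)
print(clusters)
```

A production version would likely weight by exposure and normalize by the number of obligors per sector, so that large sectors are not flagged on volume alone.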

Provisioning and capital calculations: Point-in-time PD curves feed directly into IFRS 9 expected loss calculations and Basel capital models. Weekly updates mean provisions reflect current conditions, not stale quarterly estimates. Credit transition matrices provide forward-looking migration probabilities required for lifetime ECL.

Stress testing: Credit Risk Correlation Matrices support scenario analysis. When modeling recession scenarios or industry-specific shocks, correlations show how different portfolio segments behave together under stress.

Factors that Delay PD Model Implementation (And How to Avoid Them)

Consensus data implementation differs from traditional rating agency integrations in ways that catch even experienced risk teams off guard.

Attempting full integration simultaneously

Consensus data serves multiple use cases—provisioning, stress testing, portfolio monitoring, and originations. Start with one high-impact application, typically IFRS 9 provisioning or unrated entity coverage. Enterprise-wide deployment from day one creates months of data mapping work with no immediate value demonstration.

Interpreting consensus data like static benchmarks

Consensus data refreshes weekly, providing early warning signals that complement slower-moving benchmarks. Train analysts on interpreting weekly fluctuations and distinguishing genuine credit deterioration from normal volatility. Weekly movement doesn’t necessarily indicate material change—trend analysis matters more than single-period shifts.
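One hedged way to separate trend from noise is to compare the average PD over the most recent weeks with the average over the preceding window, rather than reacting to any single weekly move. The four-week window and 10% relative-rise threshold below are illustrative assumptions, not recommended settings.

```python
def sustained_deterioration(weekly_pds, window=4, min_relative_rise=0.10):
    """True when the latest window's mean PD exceeds the prior window's mean
    by at least min_relative_rise (e.g. 0.10 for a 10% relative increase)."""
    if len(weekly_pds) < 2 * window:
        raise ValueError("need at least two full windows of observations")
    recent = sum(weekly_pds[-window:]) / window
    prior = sum(weekly_pds[-2 * window:-window]) / window
    return (recent - prior) / prior >= min_relative_rise

# Hypothetical weekly consensus PDs for two obligors
noisy = [0.020, 0.021, 0.020, 0.021, 0.020, 0.023, 0.020, 0.021]
trending = [0.020, 0.020, 0.021, 0.021, 0.022, 0.023, 0.024, 0.025]

print(sustained_deterioration(noisy))     # single-week spike, no sustained rise
print(sustained_deterioration(trending))  # steady climb across the window
```

The noisy series contains one sharp weekly jump but its window average barely moves, while the trending series rises steadily and crosses the threshold.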

Skipping parallel validation

Auditors want performance evidence against your actual portfolio. Backtest consensus PDs against historical defaults during the pilot phase. Six months of operational evidence showing enhanced predictive accuracy makes audit conversations straightforward. “We think this works” fails. A quantified result such as “Here’s backtest proof it caught 73% of our 2022-2023 defaults 6+ months early” succeeds.

Underestimating the calibration period

Senior analysts need to see how consensus data strengthens existing processes rather than replacing them. Show specific cases where it provided early warnings on monitored credits or filled coverage gaps on unrated entities. Position it as an additional data point complementing their expertise. Analysts who view it as questioning their judgment resist adoption.

Assuming standard API integration

Traditional rating feeds map entity IDs to grades. Consensus data includes PD curves, transition matrices, and correlation matrices, each flowing to different systems. Involve data teams early to map integration points and avoid underestimating the architecture work required. What looks like a simple API connection in the requirements document can become a three-month IT project if loan origination systems run on legacy infrastructure.

Case Study: How a $150B Bank Used Credit Benchmark to Recalibrate Credit Models

A top-tier US bank with approximately $150 billion in assets needed external validation to pressure-test internal credit models. Their Chief Credit Officer required peer-driven insights to determine if risk assessments were appropriately calibrated, particularly for entities without traditional ratings. Without an outside perspective, they couldn’t confidently validate credit views.

The bank embedded Credit Benchmark’s consensus data directly into credit models as a validation layer alongside internal estimates and agency ratings, using it to recalibrate PDs and LGDs while leveraging peer insights for Shared National Credit exam preparation.

“Credit Benchmark has given us a clearer lens into how our peers assess credit risk, especially for unrated names,” the Chief Credit Officer noted. “The ability to benchmark our internal views has significantly increased our confidence in risk decisions, and it’s directly informed model recalibration efforts.”

Measurable outcomes:

- Improved model accuracy by pressure-testing against market consensus and aligning with peer views

- For unrated entities where traditional validation methods weren’t available, consensus data provided external benchmarking

- Validated internal assessments against anonymized peer views with confidence

Build a Probability of Default Model That Passes Audits Today

Credit institutions thriving through the next decade won’t be those with the largest quant teams or most sophisticated proprietary models. They’ll be those recognizing consensus data as infrastructure, not nice-to-have.

With Credit Benchmark’s consensus-based data from 40+ global banks, you get coverage of 120,000+ entities, weekly updates catching deteriorating credits early, and regulatory transparency passing audits. Implementation takes 90 days, not two years.

Institutions embedding this intelligence into credit infrastructure will enter the next credit cycle with clearer visibility, tighter risk controls, and capital deployed where it actually earns returns.

Book a demo today to see how Credit Benchmark covers your portfolio

Essential Things To Know About Probability of Default Models (FAQs)

What differentiates consensus-based PD estimates from traditional approaches?

Consensus PDs aggregate forward-looking credit assessments of 40+ banks actively lending to each counterparty. Unlike through-the-cycle agency ratings or single-institution internal models, consensus estimates reflect:

- Current market conditions across multiple credit committees

- Real lending decisions from institutions with actual exposure

- Weekly recalibration based on emerging risk signals

This makes consensus PDs particularly valuable for validating internal estimates, filling coverage gaps for unrated entities, and satisfying supervisory expectations for external benchmarking under Basel IV and IFRS 9.

What makes consensus PDs suitable for regulatory capital validation?

Consensus credit ratings are built from the assessments that contributing institutions produce with their own Basel-approved internal models. Each bank’s regulatory authority has validated its credit assessment frameworks under BCBS guidelines. When you benchmark against consensus views, you’re aligning with 40+ independently validated approaches, not a single vendor’s proprietary model.

This matters for model risk management because:

- Supervisors can’t dismiss peer institution alignment as ‘model risk’

- Audit trails show external validation from institutions under identical or comparable regulatory frameworks

- Methodology documentation meets ECB TRIM requirements for third-party data transparency

Consensus PDs also provide point-in-time estimates satisfying IFRS 9’s forward-looking requirements, unlike through-the-cycle agency ratings that smooth volatility across cycles.

What are the types of probability of default models?

Four main types of probability of default models exist:

Statistical Models use historical default data and borrower characteristics to predict default probability through techniques like logistic regression, discriminant analysis, or credit scoring. These identify statistical relationships between financial metrics and default outcomes.

Structural Models, based on Merton’s framework, treat a company’s equity as a call option on its assets. Default occurs when asset value falls below debt obligations. These models use market data such as equity prices and volatility to estimate default probability, so they require public company data.
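In the basic Merton setup, the default probability over a horizon T is N(-d2), where d2 = [ln(V/D) + (mu - sigma^2/2) T] / (sigma sqrt(T)), V is asset value, D is the face value of debt, mu is the asset drift, and sigma is asset volatility. The sketch below takes V and sigma as given inputs, which is a simplification: in practice they are not observed directly and must be inferred from equity prices and equity volatility. The input numbers are hypothetical.

```python
from math import log, sqrt
from statistics import NormalDist

def merton_pd(asset_value, debt_face, asset_drift, asset_vol, horizon_years=1.0):
    """Probability that asset value ends below the debt face value at the horizon."""
    d2 = (
        log(asset_value / debt_face)
        + (asset_drift - 0.5 * asset_vol ** 2) * horizon_years
    ) / (asset_vol * sqrt(horizon_years))
    return NormalDist().cdf(-d2)

# Hypothetical firm: assets of 150 against debt of 100, 5% drift, 25% asset volatility
pd_low_leverage = merton_pd(150.0, 100.0, 0.05, 0.25)
# Same firm with a thinner asset cushion
pd_high_leverage = merton_pd(110.0, 100.0, 0.05, 0.25)
print(f"{pd_low_leverage:.2%} vs {pd_high_leverage:.2%}")
```

Using the expected asset return as the drift gives a real-world PD; substituting the risk-free rate gives the risk-neutral PD used in pricing.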

Machine Learning Models employ algorithms like random forests, neural networks, or gradient boosting to identify complex, non-linear patterns in large datasets. These models handle more variables and interactions than traditional statistical approaches, but create explainability challenges for regulatory validation.

Reduced-Form Models treat default as a random event whose intensity is determined by observable factors. These focus on when default might occur rather than why, often using credit spreads and market data.
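With a constant default intensity (hazard rate) lambda, the cumulative default probability over T years is 1 - exp(-lambda T). A common rule of thumb, sometimes called the credit triangle, backs the intensity out of a credit spread as lambda ≈ spread / (1 - recovery rate). The sketch below uses that approximation with hypothetical inputs; the resulting figure is a risk-neutral PD, which typically sits above real-world default rates.

```python
from math import exp

def hazard_from_spread(spread, recovery_rate):
    """Approximate constant default intensity implied by a credit spread."""
    return spread / (1.0 - recovery_rate)

def cumulative_pd(hazard_rate, horizon_years):
    """Probability of default by the horizon under a constant hazard rate."""
    return 1.0 - exp(-hazard_rate * horizon_years)

# Hypothetical inputs: 300 bps spread, 40% assumed recovery
hazard = hazard_from_spread(0.03, 0.40)
pd_1y = cumulative_pd(hazard, 1.0)
pd_5y = cumulative_pd(hazard, 5.0)
print(f"1-year PD: {pd_1y:.2%}, 5-year PD: {pd_5y:.2%}")
```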

Some institutions now use specialized frameworks like CreditMetrics (which considers credit risk impact on portfolio value) or CreditRisk+ (which focuses on calculating unexpected loss related to default probability).